For those interested in the kernel language, you can look at the referenced paper here: https://tianshilei.me/wp-content/uploads/llvm-hpc-2023.pdf

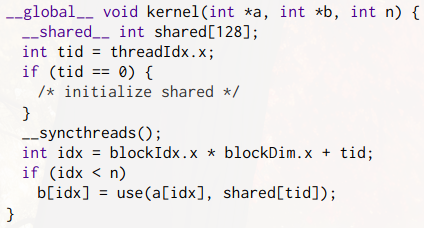

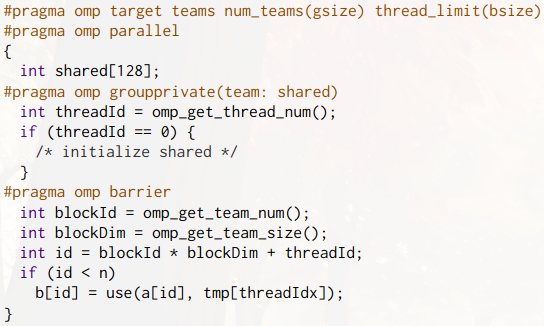

(left is some CUDA code, and right is the SIMT OpenMP equivalent)

They seem to have implemented equivalents of most of what you’d like to see when you come from the CUDA/HIP world: similar 3D thread indexing (with things like ompx_thread_id_x or ompx::thread_id(ompx::DIM_X)), shared/local memory, explicit allocation/memcpy (omp_target_alloc and omp_target_memcpy), synchronization barriers (ompx_sync_thread_block and ompx_sync_warp), warp/wavefront intrinsics etc…

What’s impressive and very encouraging is that ompx achieves performance that is very similar to CUDA on the A100 and sometimes even better performance than HIP on the MI250:

This is the best summary I could come up with:

Merged to LLVM 18 Git yesterday was the initial support for the OpenMP kernel language, an effort around having performance portable GPU codes as an alternative to the likes of the proprietary CUDA.

This work is a set of extensions to LLVM OpenMP coming out of researchers at Stony Brook University and the Lawrence Livermore National Laboratory (LLNL).

We ported six established CUDA proxy and benchmark applications and evaluated their performance on both AMD and NVIDIA platforms.

By comparing with native versions (HIP and CUDA), our results show that OpenMP, augmented with our extensions, can not only match but also in some cases exceed the performance of kernel languages, thereby offering performance portability with minimal effort from application developers."

Ultimately the hope is these extensions will ease the transition from kernel languages like CUDA to OpenMP in a portable and cross-vendor manner.

As outlined in the aforelinked research paper, the early proof-of-concept performance results have been very promising compared to NVIDIA CUDA and AMD HIP.

The original article contains 404 words, the summary contains 165 words. Saved 59%. I’m a bot and I’m open source!